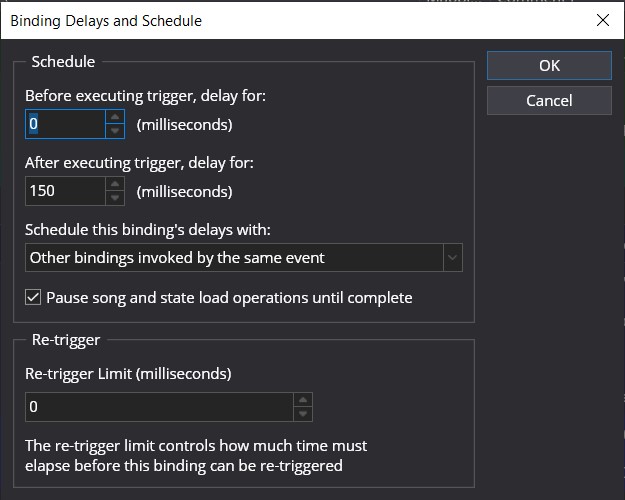

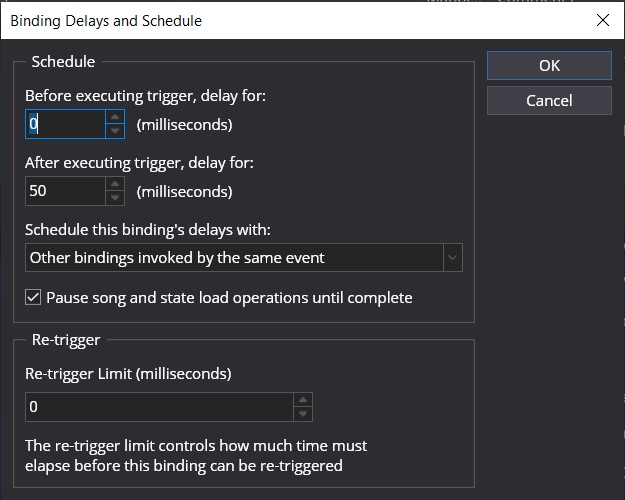

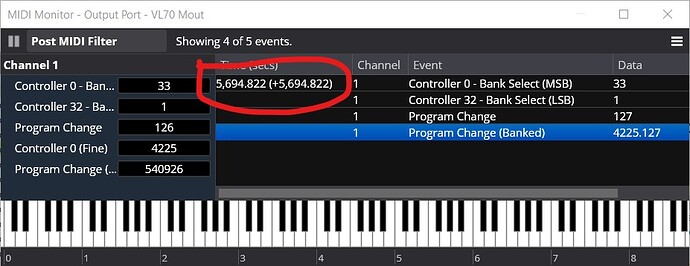

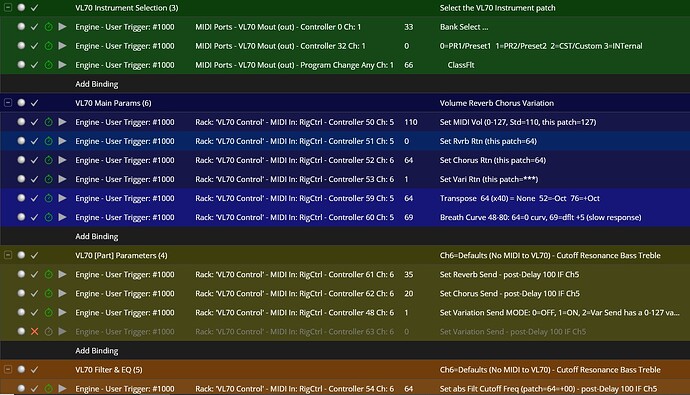

In my real-world scenario, I pause after sending MIDI so that my hardware unit (VL70) can finish processing before I send more MIDI requests. However, I see now that this strategy applies not only to the VL70 but also to the C4 itself.

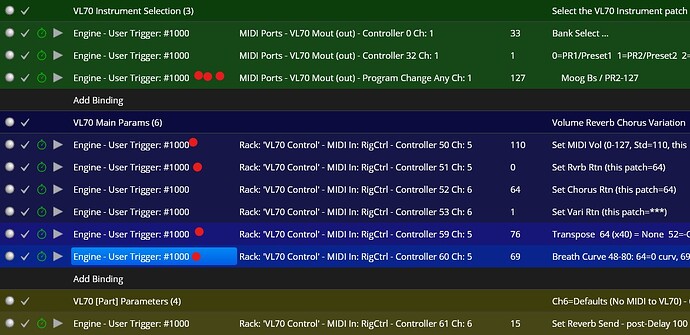

In the [F5] scenario, a prior operation does significant work: it enables routes, which fires bindings that update the Controller Bar, and it sets levels, which changes gains on routes, which fires more bindings, and so on. That doesn't happen in femtosecond time.

I believe I have to treat that complex operation the way I would treat a patch change on the VL70 and add a delay after it.

However, I am wondering whether a system of semaphores (described below) might be preferable to delays for complex tasks involving the C4 itself.

To me, the additional framework needed for delays seems daunting. Maybe I'm just not seeing how it would work, and it may turn out to be straightforward in the end, but at this juncture it does seem daunting.

I also have a history with delays that is not pretty (see below), which is another reason I'm wondering whether semaphores might be preferable.

Semaphores

Basically, a binding would declare and grab a semaphore, hold it until its tasks are complete, and then release it. A later binding that waits on the same semaphore would be blocked until the semaphore is released.
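A minimal sketch of that grab/hold/release idea, using Python's `threading.Semaphore` purely as a stand-in. The names `c4_busy`, `complex_binding` and `later_binding` are hypothetical, and this assumes bindings behave like concurrent threads, which may not be how the C4 actually runs them. The point is that the later binding waits exactly as long as the work takes, with no guessed delay.

```python
import threading
import time

# Hypothetical semaphore meaning "the C4 is busy with a complex operation".
c4_busy = threading.Semaphore(1)
log = []
grabbed = threading.Event()  # only used to make the demo ordering deterministic

def complex_binding():
    # Grab the semaphore before the heavy work (enabling routes, setting levels, ...).
    with c4_busy:
        log.append("F5 work started")
        grabbed.set()
        time.sleep(0.2)  # stand-in for however long the work really takes
        log.append("F5 work finished")
    # Leaving the `with` block releases the semaphore.

def later_binding():
    # Blocks here until complex_binding releases the semaphore.
    with c4_busy:
        log.append("later binding runs")

first = threading.Thread(target=complex_binding)
first.start()
grabbed.wait()  # make sure the first binding already holds the semaphore
second = threading.Thread(target=later_binding)
second.start()
first.join()
second.join()
print(log)
```

This prints `['F5 work started', 'F5 work finished', 'later binding runs']` whether the work takes 0.2 seconds or 20, which is exactly what a fixed delay can't promise.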

A queue of waiting bindings would be needed so that the bindings wake up in the order in which they attempted to grab the semaphore.
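To illustrate the queue requirement, here is a sketch of a FIFO ("ticket") semaphore in Python. The class name `FifoSemaphore` and its `waiting()` helper are hypothetical and for illustration only. The built-in `threading.Semaphore` is not used directly because it doesn't promise any particular wake-up order, so this version keeps an explicit queue of tickets.

```python
import threading
import time
from collections import deque

class FifoSemaphore:
    """Binary semaphore whose waiters acquire it strictly in arrival order (a sketch)."""

    def __init__(self):
        self._cond = threading.Condition()
        self._queue = deque()      # tickets of waiting bindings, oldest first
        self._next_ticket = 0
        self._held = False

    def acquire(self):
        with self._cond:
            ticket = self._next_ticket
            self._next_ticket += 1
            self._queue.append(ticket)
            # Proceed only when the semaphore is free AND we are first in line.
            while self._held or self._queue[0] != ticket:
                self._cond.wait()
            self._queue.popleft()
            self._held = True

    def release(self):
        with self._cond:
            self._held = False
            # Wake everyone; only the head of the queue passes the check above.
            self._cond.notify_all()

    def waiting(self):
        with self._cond:
            return len(self._queue)

    def __enter__(self):
        self.acquire()
        return self

    def __exit__(self, *exc):
        self.release()
        return False


# Demo: five hypothetical bindings queue up while an earlier operation holds the semaphore.
sem = FifoSemaphore()
order = []

def binding(n):
    with sem:
        order.append(n)

sem.acquire()  # the earlier complex operation is still running
threads = []
for n in range(5):
    t = threading.Thread(target=binding, args=(n,))
    t.start()
    threads.append(t)
    while sem.waiting() < n + 1:  # wait until binding n is actually in the queue
        time.sleep(0.001)
sem.release()  # operation done; bindings now run in the order they arrived
for t in threads:
    t.join()
print(order)
```

This prints `[0, 1, 2, 3, 4]`. Because a newcomer takes a ticket behind everyone already waiting, it can't jump the line even if it arrives just as the semaphore is released. Waking all waiters with `notify_all()` is a simplification that should be fine for a handful of bindings.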

Begin Extended Somewhat-Related Side-trip

Mid-90s, and I'm working for the NY and American Stock Exchanges. Bringing up all the computers needed to run the NY floor started at maybe 2 AM. Of course, some machines could boot only after other machines had booted, or had finished booting, or had booted and then completed some set of tasks (loaded databases, etc.).

This boot sequence was controlled by a system (not mine) of timing delays. I would hear comments over my cubicle wall like “OK, just add another 3 second delay to X17354 and that should cover it”.

Then … Black Monday … the system repeatedly failed to come up because some host took longer to boot than expected. The markets opened an hour late, and all the computer folks lost their yearly bonus (which was pegged to the percentage of uptime of the computer systems).

My thought at the time was to develop a semaphore system …

End Side-trip